You might have faced a situation where a Product Manager slides into your Slack with a "quick question" about making the cancel button "less prominent."

Or your engineering lead suggests hiding the unsubscribe option behind "just one more click." Leadership wants to know why conversions dropped after you redesigned that modal to be more transparent.

If you never had to face this, congratulations! You have an amazing team.

The pressure to manipulate users isn't new, but it's getting harder to spot. The tactics have evolved. They're subtle now. Wrapped in the language of "friction reduction" and "conversion optimization."

Here's what makes this tricky: persuasion is part of good design. You should guide users toward beneficial actions. But somewhere between a helpful nudge and outright deception, designers cross a line that damages trust, tanks retention, and increasingly, violates regulations.

The question isn't whether to persuade users. It's how to do it without destroying the relationship you're trying to build.

COOL THINGS WE DID

We just wrapped up product design for Itair, creating 80+ screens across desktop and mobile for their AI-powered travel platform.

Who It's For: Early-stage travel tech startups ($250K raised) needing to serve two distinct user groups - content creators monetizing travel videos and travelers booking AI-curated trips.

What We Did: Designed a complete product experience in 30 days with a single designer, creating minimalist workflows that seamlessly integrate video sharing, location tagging, and booking functionality for both user types.

The Result: Fully responsive product design system with 80+ screens that enables Itair to launch their dual-sided marketplace, connecting creator content directly to bookable travel experiences.

WHERE PERSUASION BECOMES MANIPULATION

Many Designers Can Spot A Blatant Dark Pattern.

No one's defending fake countdown timers or hidden costs at checkout. But the patterns causing real damage in 2025 operate in murkier territory.

Persuasive design respects user goals and provides clear paths to desired outcomes. Manipulative design prioritizes business metrics over user needs, often by obscuring information or exploiting cognitive biases.

Consider subscription interfaces. Offering an annual plan alongside monthly options is persuasive - you're showing value clearly. But when Audible requires users to navigate through six pages and a phone call to cancel while sign-up takes thirty seconds, that's manipulation through asymmetric design. The intent isn't to help users make informed choices; it's to exhaust them into staying.

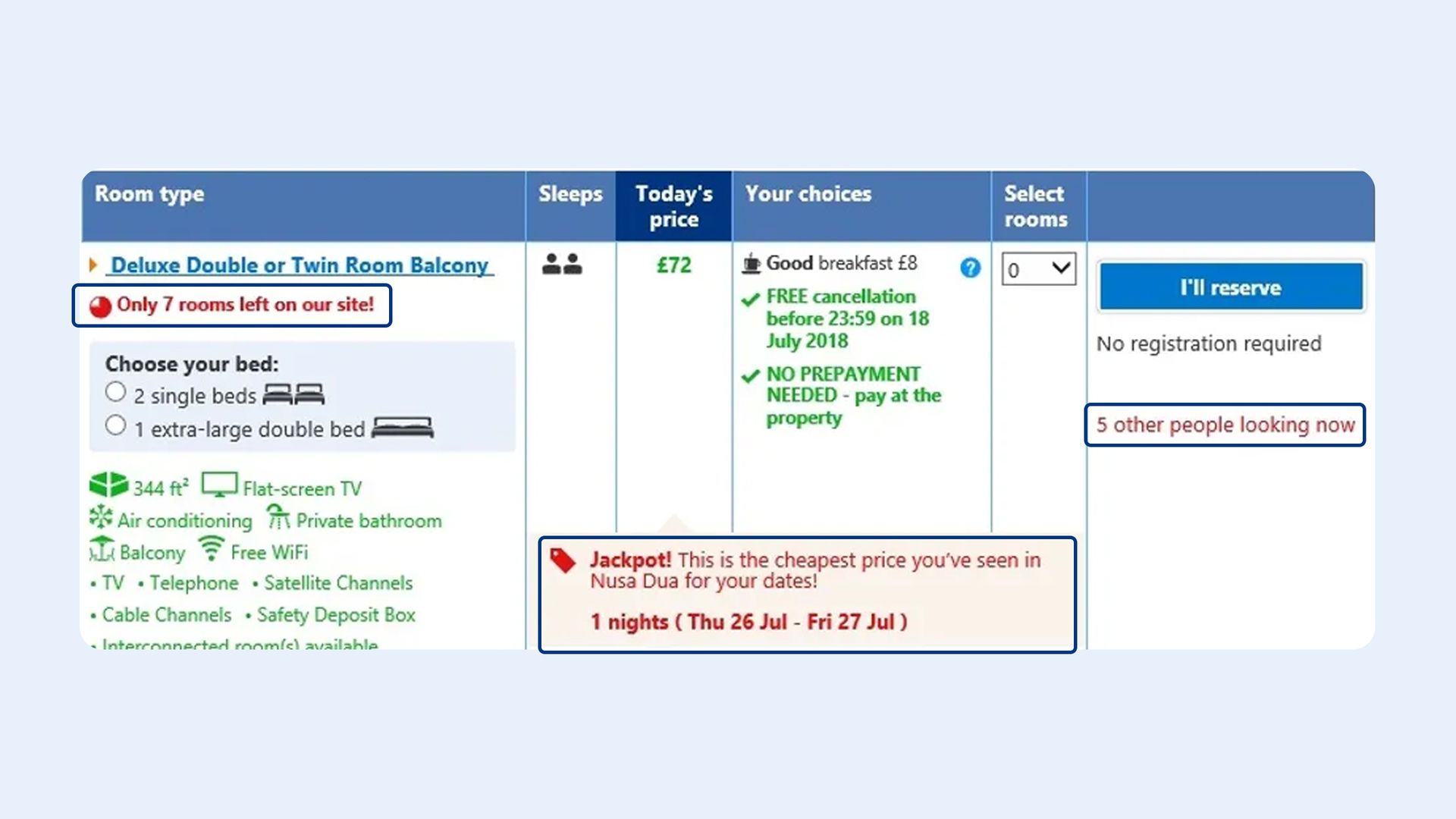

Source: Booking

The gray area gets interesting with social proof. Booking.com showing "23 people are looking at this hotel" can be persuasive if accurate and timely. When that number is inflated, outdated, or algorithmically generated to create urgency, it crosses into manipulation.

Your role as a designer includes knowing where this line sits. Not just for ethical reasons - manipulative patterns demonstrably hurt long-term metrics.

DARK PATTERNS DESIGNERS ARE ASKED TO IMPLEMENT

Let's Talk About The Requests Landing In Your Backlog.

These aren't framed as "please add manipulative patterns." They come disguised as business requirements, A/B test hypotheses, or "industry standard practices."

The pre-checked box request.

Product wants newsletter opt-ins checked by default because "users who don't want it will uncheck it." Some users might not notice pre-checked boxes during sign-up flows. That's not informed consent - it's exploiting inattention.

The confirmation shaming pattern.

"No thanks, I don't want to save money" or "I'll pay full price" as decline buttons. Your growth team might call this "value reinforcement." Users call it insulting. More importantly, it works through negative emotion rather than positive value communication.

The roach motel subscription flow.

Easy sign-up, nightmarish cancellation. The New York Times famously required phone calls to cancel digital subscriptions while offering one-click sign-up. The friction exists for one reason: to retain users who have already decided to leave.

The disguised ad pattern.

LinkedIn's sponsored content that mimics organic posts. Twitter's promoted tweets without clear visual distinction. Your design system team might call this "cohesive experience design." Users feel betrayed when they realize they've been tricked into engaging with ads.

These patterns share a common thread: they work in the short term. That's why stakeholders push for them. Your job includes understanding why they fail long-term, and having data ready when these requests arrive.

A PRACTICAL FRAMEWORK FOR ETHICAL PERSUASION DESIGN

You Need a Framework That Works in Sprint Planning, Not Just in Ethics Discussions.

Here's one that maps to actual design decisions.

The Autonomy Test.

Does your design help users achieve their goals, or does it prioritize business goals over user autonomy? Apply this to every flow. When Spotify prompts users to upgrade to Premium after they've encountered their skip limit, that's persuasive - users understand the constraint and the solution aligns with their goal of continuous listening. When Candy Crush shows a "Continue playing" modal immediately after a loss without clear dismissal options, that exploits a moment of frustration rather than supporting user autonomy.

Test this practically: Can users easily accomplish their intended task without being pressured toward your preferred outcome? If canceling requires significantly more steps than subscribing, you've failed the autonomy test.

The Transparency Standard.

Are all costs, commitments, and consequences visible before the user commits? This goes beyond legal compliance. Picture two airlines: one shows the full price (including mandatory fees) early in the booking flow, while the other hides baggage fees until checkout. You'd expect the first airline's customer satisfaction scores to run higher.

Apply this to every conversion point. Free trial? Show exactly when charging begins, how much, and how to cancel - before collecting payment information. Privacy settings? Explain what data you're collecting and why, without requiring users to read a legal document.

The Benefit Alignment Principle.

Does the design benefit both the business and the user, or just the business? Slack's notification system suggests turning on Do Not Disturb mode after 6 PM. That potentially reduces engagement metrics. But it helps prevent burnout and makes the product more sustainable for users.

Look for win-win patterns. Notion's template gallery showcases use cases while helping users get started faster. Figma's community files increase engagement while providing learning resources. These patterns succeed because they're genuinely helpful.

The Reversibility Check.

Can users easily undo or opt out of the decision you're encouraging? Amazon's one-click purchasing is persuasive because it's paired with easy returns. If you're making sign-up easy but cancellation difficult, that imbalance signals manipulation.

Build exit ramps into every major flow. Privacy settings should be as accessible as initial permissions. Downgrade options should be as visible as upgrades. This isn't just ethical - it's practical risk management as regulations increasingly require symmetric processes.

The Cognitive Load Assessment.

Are you simplifying genuine complexity, or creating artificial complexity to guide users toward specific choices? If you're adding complexity to discourage a user-beneficial action, you're manipulating. If you're reducing complexity to help users make informed decisions faster, you're designing well.

AUDITING YOUR PRODUCT FOR MANIPULATIVE PATTERNS

You Can't Fix Patterns You Don't Recognize.

Most products accumulate dark patterns gradually - a growth experiment here, a quick fix there. Run systematic audits.

The fresh eyes test.

Have someone unfamiliar with your product complete critical tasks: sign up, change settings, cancel subscription, manage privacy. Watch them. Don't help. Note every moment of confusion, frustration, or surprise. These often signal manipulative patterns.

Record these sessions. Present clips to stakeholders. Watching actual users struggle hits differently than reading reports.

The transparency inventory.

List every point where users commit to something: purchases, subscriptions, data sharing, notifications. For each one, document: What information is shown before commitment? How many steps to commit vs. reverse the decision? Where are terms and costs displayed? Use a simple scoring rubric: Fully transparent, Partially transparent, or Hidden/Obscured.
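
If a spreadsheet feels too loose, the inventory can be kept as structured data. Here's a minimal sketch in Python. The field names, the sample entries, and the rule that maps answers onto the three-level rubric are all assumptions for illustration; adjust them to what your team actually records.

```python
from dataclasses import dataclass
from enum import Enum


class Transparency(Enum):
    FULL = "Fully transparent"
    PARTIAL = "Partially transparent"
    HIDDEN = "Hidden/Obscured"


@dataclass
class CommitmentPoint:
    # Hypothetical fields mirroring the questions in the inventory.
    name: str
    costs_shown_before_commit: bool
    terms_shown_before_commit: bool
    steps_to_commit: int
    steps_to_reverse: int


def score(point: CommitmentPoint) -> Transparency:
    """Map a commitment point onto the three-level rubric (assumed rule)."""
    shown = [point.costs_shown_before_commit, point.terms_shown_before_commit]
    if all(shown):
        return Transparency.FULL
    if any(shown):
        return Transparency.PARTIAL
    return Transparency.HIDDEN


# Made-up sample entries, not data from a real product.
inventory = [
    CommitmentPoint("Free trial sign-up", True, False, 2, 6),
    CommitmentPoint("Newsletter opt-in", True, True, 1, 1),
    CommitmentPoint("Data sharing consent", False, False, 1, 4),
]

for point in inventory:
    print(f"{point.name}: {score(point).value}")
```

The step counts are stored but not scored here; they feed naturally into a separate symmetry check.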

The comparison matrix.

Map the user journey for key actions. Create columns for: Sign-up, Cancellation, Privacy opt-out, Downgrade. Count clicks, information presented, and time required for each. If any action takes significantly more effort than its opposite, you've found asymmetric design that likely constitutes manipulation.
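
Once you've counted steps by hand, the comparison itself is simple arithmetic. The sketch below assumes you record each action next to its opposite and flags any pair where undoing takes more than three times the effort of doing. The step counts are invented, and the 3:1 threshold is a rule of thumb rather than a standard, so tune it to your product.

```python
# Hypothetical step counts gathered by walking each flow manually.
flows = {
    "subscription": {"do": 3, "undo": 11},     # sign-up vs. cancellation
    "plan change": {"do": 2, "undo": 4},       # upgrade vs. downgrade
    "tracking consent": {"do": 1, "undo": 5},  # opt-in vs. privacy opt-out
}


def effort_ratio(do_steps: int, undo_steps: int) -> float:
    """How many times more steps the reverse action takes."""
    if do_steps <= 0:
        raise ValueError("do_steps must be a positive step count")
    return undo_steps / do_steps


def flag_asymmetric(flows: dict, threshold: float = 3.0) -> list:
    """Return the names of flows whose undo/do ratio exceeds the threshold."""
    return [
        name
        for name, steps in flows.items()
        if effort_ratio(steps["do"], steps["undo"]) > threshold
    ]


flagged = flag_asymmetric(flows)
print(flagged)  # ['subscription', 'tracking consent']
```

The same function works for time required or screens visited; swap the unit, keep the ratio.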

The emotional pressure check.

Review all your microcopy, especially for decline buttons, cancellation flows, and opt-outs. Flag anything that uses guilt, shame, FOMO, or fear. Replace it with neutral language. Instead of "No, I don't want to protect my data," use "Skip for now." The functionality doesn't change; the manipulation does.
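
A first pass over microcopy can be automated, as long as a human still reviews the results. Here's a rough sketch that scans strings for shaming or FOMO phrasing. The patterns and labels are illustrative assumptions, not a complete taxonomy; expect both misses and false positives.

```python
import re

# Illustrative patterns only (assumptions); extend them from your own reviews.
PRESSURE_PATTERNS = [
    ("shame", re.compile(r"\bno( thanks)?,? i (don't|do not) (want|need|care)\b", re.IGNORECASE)),
    ("shame", re.compile(r"\bi('ll| will) (pay full price|miss out)\b", re.IGNORECASE)),
    ("fomo", re.compile(r"\b(last chance|don't miss out|only \d+ left)\b", re.IGNORECASE)),
]


def flag_microcopy(strings):
    """Return (text, label) pairs for strings that match a pressure pattern."""
    findings = []
    for text in strings:
        # Normalize curly apostrophes so "don’t" and "don't" match alike.
        normalized = text.replace("\u2019", "'")
        for label, pattern in PRESSURE_PATTERNS:
            if pattern.search(normalized):
                findings.append((text, label))
                break  # one label per string is enough for triage
    return findings


decline_copy = [
    "No, I don't want to protect my data",
    "I'll pay full price",
    "Skip for now",
    "Last chance to save 20%",
]

for text, label in flag_microcopy(decline_copy):
    print(f"[{label}] {text}")
```

Run it against your exported string files, then rewrite whatever it surfaces by hand.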

The regulatory compliance check.

Review your patterns against specific regulations: GDPR's consent requirements, CCPA's "Do Not Sell" provisions, the EU DSA's dark pattern prohibitions. Use the actual regulatory text, not summaries. Even if you're not currently subject to these regulations, they indicate where global standards are heading.

Create a tracking sheet: Pattern identified, Regulation it violates, Risk level (Legal/Reputational/User trust), Proposed fix, Owner, Timeline. Make it visible. Update it regularly. This transforms "someday we should fix this" into "this is actively being addressed."
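
If you'd rather generate the sheet than maintain it by hand, a sketch like this produces a CSV with those columns and rejects incomplete rows. The sample row is an illustrative assumption, not legal advice; confirm the regulation column against the actual text.

```python
import csv
import io

COLUMNS = [
    "Pattern identified",
    "Regulation it violates",
    "Risk level",
    "Proposed fix",
    "Owner",
    "Timeline",
]
RISK_LEVELS = {"Legal", "Reputational", "User trust"}


def build_sheet(rows):
    """Validate rows and return the tracking sheet as CSV text."""
    for row in rows:
        missing = [column for column in COLUMNS if not row.get(column)]
        if missing:
            raise ValueError(f"Row is missing values for: {missing}")
        if row["Risk level"] not in RISK_LEVELS:
            raise ValueError(f"Unknown risk level: {row['Risk level']}")
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical entry for illustration only.
rows = [
    {
        "Pattern identified": "Pre-checked newsletter opt-in",
        "Regulation it violates": "GDPR consent requirements",
        "Risk level": "Legal",
        "Proposed fix": "Leave the box unchecked by default",
        "Owner": "Growth design",
        "Timeline": "Next sprint",
    }
]

sheet = build_sheet(rows)
print(sheet)
```

Forcing every row to name an owner and a timeline is the point: a pattern without both never gets fixed.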

The competitive ethical benchmark.

Audit 3-5 competitors specifically for how they handle key ethical decision points. Not to copy dark patterns, but to identify who's leading with ethical design.

The goal isn't perfection. It's systematic improvement and ensuring new features don't introduce new manipulative patterns. Schedule these audits quarterly. Make them part of your design system documentation. Treat them like accessibility audits - essential maintenance, not optional enhancement.

KEY TAKEAWAYS

Test every conversion point for autonomy:

Can users accomplish their goal without being pressured toward your preferred outcome? Map sign-up versus cancellation flows and count clicks - if cancelling takes more than three times as many clicks as signing up (a ratio above 3:1), redesign for symmetry. Run this quarterly across all major user journeys.

Build a dark pattern audit into your design process:

Before shipping, check for pre-checked boxes, confirmation shaming, asymmetric flows, disguised ads, and forced continuity. Create a checklist integrated into your design system documentation. Make it mandatory in design reviews, just like accessibility checks.
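
Some checklist items can be partly automated. As one example, here's a sketch that scans static HTML for checkboxes that ship already checked, using Python's standard-library parser. The sample markup is made up, and the scan only sees markup as written: boxes checked by JavaScript at runtime won't show up, so treat it as a supplement to manual review.

```python
from html.parser import HTMLParser


class PrecheckedBoxFinder(HTMLParser):
    """Collect the names of checkbox inputs that carry a `checked` attribute."""

    def __init__(self):
        super().__init__()
        self.prechecked = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        input_type = (attr_map.get("type") or "").lower()
        if tag == "input" and input_type == "checkbox" and "checked" in attr_map:
            label = attr_map.get("name") or attr_map.get("id") or "<unnamed>"
            self.prechecked.append(label)


# Hypothetical sign-up form markup.
signup_form = """
<form>
  <input type="email" name="email">
  <input type="checkbox" name="newsletter" checked>
  <input type="checkbox" name="terms">
</form>
"""

finder = PrecheckedBoxFinder()
finder.feed(signup_form)
print(finder.prechecked)  # ['newsletter']
```

A pre-checked box isn't always wrong (a "remember me" toggle, say), so the output is a list to review, not a list of violations.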

Prepare business cases for ethical design before the conversation happens:

Compile data on customer lifetime value impact, regulatory fines in your industry, and competitor examples of ethical design working. Store these in a shared doc you can reference when stakeholders push for manipulative patterns. Update it monthly with new cases and data.

Replace emotional manipulation in microcopy with neutral language:

Audit all decline buttons, cancellation flows, and opt-out screens. Flag anything using guilt, shame, FOMO, or fear. Rewrite using straightforward language that respects user choice. A/B test the neutral copy so you have evidence of how it performs, rather than an assumption, when stakeholders push back.

Document stakeholder requests for dark patterns and your recommendations:

When you raise concerns about manipulative patterns and they're overruled, send email summaries confirming the decision and your objections. This protects you professionally and creates an evidence trail that often makes stakeholders reconsider. Include specific regulatory risks and competitor examples in these summaries.

The design decisions you make about persuasion versus manipulation define your product's relationship with users. Not in abstract ethical terms - in concrete retention rates, support costs, and brand perception.

You'll face pressure to manipulate. That pressure comes with impressive-sounding business justifications and data showing short-term gains. Your response needs equally solid business arguments showing long-term costs. The regulatory environment is shifting decisively against dark patterns. User awareness is increasing. The companies building sustainable products are the ones treating users like partners, not targets.

This is about understanding that manipulative patterns are bad business disguised as good tactics. The data increasingly proves this.

Your responsibility extends beyond making interfaces look good. You're shaping how your company relates to users. That relationship either builds trust that compounds over time, or extracts value until users leave. The patterns you ship determine which path you're on.

Keep designing,